This blog post is the result of a recent discussion started on the LinkedIn HubSpot Partners Group about PDF files and duplicate blog content penalties. (Note: This is a closed group, so you won’t be able to view the discussion if you’re not a member, sorry.)

I made a few suggestions in the LinkedIn discussion about hiding PDFs from search engines, but I realized that this topic needed a much longer treatment to be useful. In this post I’m going to focus on using PDFs on an inbound marketing website, but my suggestions also apply to most business websites.

Here are some examples of PDF content you might offer on your website:

- Your own premium content, like ebooks, tip sheets or whitepapers for lead generation purposes.

- Content intended for printing, like worksheets, maps, how-to lists, mind maps, etc.

- Marketing collateral intended for downloading, printing, or redistribution, including brochures, data sheets, spec sheets, etc.

- Content from other sources that you are redistributing (with permission, of course), like articles, whitepapers, ebooks, etc.

In my opinion, the two biggest reasons why Inbound Marketers should consider hiding their PDF content from search engines are the duplicate content penalty and the loss of leads.

Avoid the Duplicate Content Penalty

The duplicate content penalty is quite simple, so let’s get it out of the way now. When the same content appears in different places on the same website, Google and other search engines may filter out the duplicates or rank those pages lower.

Say you collect a number of related blog posts into an ebook PDF file and use that as a premium for lead generation. If the search engine robots can find that PDF, they will index all the text inside the ebook. Now, when the search engine sees those same portions of content in the old blog posts, it will flag those posts (or the PDF file) as duplicate content.

The easy solution is to make sure the search engines can’t find or read the PDFs. Duplicate content problem solved.

Protect Your Lead Generation Machine

As Inbound Marketers, your goal is to attract visitors to your website with quality, relevant content and convert them into leads with an appealing offer. In many cases this offer is a piece of downloadable premium content, like a PDF file. The visitor completes a short form on a landing page to gain access to the PDF file.

It’s a basic lead generation technique, but it only works if the visitor completes the landing page form. If they can find your PDF offering directly through a web search, then they’ll likely view only the PDF file itself.

Even worse, by opening or downloading your PDF directly, they might never see your website and its accompanying navigation. They only see your PDF file, nothing else. All your hard work creating an accessible and useful website is wasted. And forget capturing their information or plugging them into your marketing funnel. Not likely to happen. You’ve missed your chance.

Some marketers try to compensate by placing their website URL in the header or footer of their PDF, hoping that the curious reader clicks the link and visits.

Don’t count on it.

Be ruthless in forcing your visitors through your landing pages and opt-in forms. And the only way to enforce these opt-ins is to hide your PDF content from the search engines.

Fortunately, it’s not hard to hide PDFs and other files from compliant search engines like Google, Bing, Yahoo, etc.

The Right Way to Upload Files and Hide Them

First, upload your PDF to your website. In HubSpot’s COS you would follow the instructions provided here.

For websites not using the HubSpot COS, you need to upload your file to a directory. You can create a unique directory for each PDF, or a single directory that holds all of your PDF files and other downloads. If you choose a single download directory, make sure visitors can’t view a listing of its contents. If your website runs on an Apache server, you can add this simple line to the beginning of your .htaccess file:

Options -Indexes

This will prevent any directory listings.

If this change causes problems, then you will need to create an index file for your download directory.

In WordPress or other websites based on PHP, you can create an index.php file that contains the following line to redirect the visitor back to your home page:

<?php header("Location: /"); exit; ?>

For non-PHP websites, you can create index.htm, a simple HTML file that redirects prying eyes back to your home page:

<html>
  <head>
    <title>Forbidden</title>
    <meta http-equiv="refresh" content="0;URL='http://www.yourwebsite.com/'" />
  </head>
  <body>
    <p>Nothing to see here, go <a href="http://www.yourwebsite.com/">home</a>.</p>
  </body>
</html>

Upload the completed file to your download directory.
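To confirm that your download directory no longer exposes a listing, you could run a quick check like the Python sketch below. It assumes Apache’s default auto-generated listing page, whose title starts with “Index of /”; the function names are made up for this example. A 403 error, a redirect to your home page, or a page without that title all suggest the listing is hidden.

```python
import urllib.error
import urllib.request


def looks_like_listing(html: str) -> bool:
    """Assumes Apache's auto-generated title, e.g. <title>Index of /downloads</title>."""
    return "<title>Index of /" in html


def check_directory(url: str) -> str:
    """Fetch a directory URL and report whether it appears to expose a listing."""
    try:
        # urlopen follows redirects, so an index.php/index.htm redirect lands on the home page.
        with urllib.request.urlopen(url, timeout=10) as response:
            body = response.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        # "Options -Indexes" with no index file typically produces 403 Forbidden.
        return f"HTTP {err.code}: no listing served"
    except urllib.error.URLError as err:
        return f"request failed: {err.reason}"
    return "listing exposed" if looks_like_listing(body) else "no listing detected"
```

For example, you might call `check_directory("http://www.yourwebsite.com/wp-content/downloads/")` with your own directory URL in place of the placeholder.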

Modifying the Robots.txt file to Hide Pages, Files, and Directories from Search Engines

The robots.txt file contains instructions for blocking search engine robots (or search bots) from certain pages or directories. A compliant search bot will obey the instructions in your robots.txt file and skip indexing that content.

If you’re using the HubSpot COS for your website, then you can edit your robots.txt files using the instructions here.

For everyone else, you’ll need to find your robots.txt file, located in the root directory of your website. This directory usually contains your index page.

Open your robots.txt file in an FTP program or online file editor. Or, you can simply enter your domain name with robots.txt at the end (www.yourwebsite.com/robots.txt) and save the text file to your computer for editing in a text editor, like Notepad on Windows.

NOTE: Don’t edit the file in a full-fledged word processor like Word. Use a text editor that saves plain text (ASCII or UTF-8).

If your site doesn’t have a robots.txt file, don’t worry; it’s easy to create one. Use a text editor to create a new blank file named “robots.txt”.

Now you’re ready to edit your file.

If your file doesn’t have a line starting with “User-agent,” add this one now:

User-agent: *

The User-agent line names the search bot that the rules below it apply to. The * is a wildcard that applies those rules to all search bots. You can edit your file further to block certain search bots, but that’s a subject for a future post or your own research.

Next, we want to block access to the directory we are using for our PDF files. In a WordPress website, you might create a “downloads” directory in your “wp-content” directory. Your full URL would look like this:

www.yourwebsite.com/wp-content/downloads

To block this directory in robots.txt, you would add the following line:

Disallow: /wp-content/downloads/

You always list the directory path starting after your domain name. And pay close attention to capitalization. It counts.

If you started with a blank robots.txt file, your file would now look like this:

User-agent: *
Disallow: /wp-content/downloads/

You can also block individual pages or files in the same way. You should always block the download page (also called the “thank you” page) that contains the download link for the PDF file, unless you choose to provide the download link only in the confirmation email.

The download page for an ebook at www.yourwebsite.com/ebookdownload.html would look like this:

Disallow: /ebookdownload.html

The same page in WordPress, www.yourwebsite.com/ebookdownload, would look like this:

Disallow: /ebookdownload

If you are using WordPress, you might want to consider using a search engine blocking plugin like PC Hide Pages to hide your download pages. It’s much easier to use than editing robots.txt.

To hide a specific PDF file (or other file) at www.yourwebsite.com/downloads/ebook.pdf you would enter:

Disallow: /downloads/ebook.pdf
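Before uploading your edited robots.txt, you can check which URLs it actually blocks. The Python sketch below uses the standard `urllib.robotparser` module with the example rules from this post; “Googlebot” is just a sample user agent, and `is_blocked` is a helper name made up for this example. This parser only does simple prefix matching, so it doesn’t understand wildcard rules.

```python
from urllib.robotparser import RobotFileParser

# The example rules from this post, combined into one robots.txt file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-content/downloads/
Disallow: /ebookdownload.html
Disallow: /downloads/ebook.pdf
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if a compliant bot with this user agent may not crawl the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "http://www.yourwebsite.com/wp-content/downloads/ebook.pdf",
        "http://www.yourwebsite.com/ebookdownload.html",
        "http://www.yourwebsite.com/downloads/ebook.pdf",
        "http://www.yourwebsite.com/blog/",
    ):
        print("blocked" if is_blocked(ROBOTS_TXT, url) else "allowed", url)
```

The first three URLs should report “blocked” and the blog URL “allowed,” because the `User-agent: *` group applies to every bot that has no group of its own.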

Finally, this rule will hide all PDFs from most major search engines, but it relies on wildcard matching (* and $) that not every search bot supports:

Disallow: /*.pdf$
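Wildcard rules are easy to get wrong, so here is a simplified Python sketch of how a search bot that supports them might match a rule against a URL path: `*` matches any run of characters, a trailing `$` marks the end of the URL, and matching starts at the beginning of the path. The `rule_matches` function is hypothetical and ignores other details, such as how conflicting Allow and Disallow rules are resolved.

```python
import re


def rule_matches(rule: str, path: str) -> bool:
    """Simplified wildcard matching for a robots.txt Disallow value.

    '*' matches any sequence of characters, and a trailing '$' anchors the
    match to the end of the path. Matching always starts at the path's beginning.
    """
    anchored = rule.endswith("$")
    if anchored:
        rule = rule[:-1]
    # Escape regex metacharacters in each literal piece, then rejoin with '.*'.
    pattern = ".*".join(re.escape(piece) for piece in rule.split("*"))
    if anchored:
        pattern += "$"
    return re.match(pattern, path) is not None


if __name__ == "__main__":
    for path in ("/downloads/ebook.pdf", "/downloads/ebook.pdf?v=2", "/pdf-guide.html"):
        print(path, rule_matches("/*.pdf$", path))
```

Note the second case: because of the `$` anchor, a PDF URL that carries a query string (such as `?v=2`) doesn’t match, so this rule wouldn’t block it.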

For more examples, take a look at Google’s very useful page on robots.txt.

You can also use one of several robots.txt file generators to create your file. Do a web search on the phrase “robots.txt generator.”

Using “no follow” Links

If you have PDFs that are available for download from any public page on your website (those pages not hidden by robots.txt or other means), then you should consider making all links to those files “no follow” links.

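A “no follow” link tells compliant search engines not to follow that link, which helps keep them from finding and indexing the PDF. It isn’t a guarantee, though: if another page links to the same PDF without “no follow,” a search engine can still find it.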

You’ll need to add rel="nofollow" to your link, like this:

<a href="http://www.yourwebsite.com/downloads/ebook.pdf" rel="nofollow">Download PDF</a>
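To find PDF links on a page that still lack the attribute, you could scan the page’s HTML with a short script. This Python sketch uses the standard `html.parser` module; it only checks links whose URL path ends in .pdf, and the class and function names are made up for this example.

```python
from html.parser import HTMLParser
from typing import List


class PdfLinkAuditor(HTMLParser):
    """Collects links to .pdf files that don't carry rel="nofollow"."""

    def __init__(self) -> None:
        super().__init__()
        self.followed_pdf_links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        # rel can hold several space-separated values, e.g. rel="nofollow noopener".
        rel_values = (attributes.get("rel") or "").lower().split()
        # Ignore any query string or fragment when checking the file extension.
        path = href.split("#", 1)[0].split("?", 1)[0]
        if path.lower().endswith(".pdf") and "nofollow" not in rel_values:
            self.followed_pdf_links.append(href)


def find_followed_pdf_links(html: str) -> List[str]:
    auditor = PdfLinkAuditor()
    auditor.feed(html)
    auditor.close()
    return auditor.followed_pdf_links


if __name__ == "__main__":
    sample = (
        '<a href="http://www.yourwebsite.com/downloads/ebook.pdf" rel="nofollow">Download PDF</a>'
        '<a href="/downloads/brochure.pdf">Brochure</a>'
    )
    print(find_followed_pdf_links(sample))  # ['/downloads/brochure.pdf']
```

Feed it the HTML of each public page that offers downloads; any link it returns is one a search engine may still follow.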

As a Last Resort, Use PDF Security Settings to Hide Content

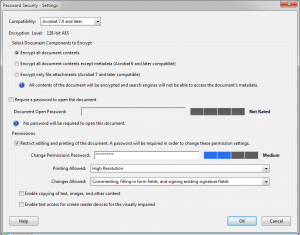

Many PDF creation and editing programs allow document creators to apply security settings to a PDF file. These settings include file opening and editing restrictions, plus search engine attributes.

Access restrictions on a PDF file mean encryption, which requires a password. We’re going to set a password, then customize the permissions available to users and search engines.

For this example I’m using screens from Adobe Acrobat Pro X, but the settings terminology should be similar for most programs.

First, you need to access the security settings at the time of PDF creation or when you edit it afterward.

In Adobe Acrobat, you can find the settings in Document Properties, under the Security tab. Select password security as the security method to set restrictions.

Then set the options like this:

- We want to encrypt all document contents to hide the text from search engines.

- We don’t want to require a password for opening the document, but we do want to set a password to restrict editing the document.

- We want to allow high resolution printing.

- We only want to allow readers to comment and fill forms.

- Do NOT enable copying of text, images or other content.

- Do NOT enable text access for screen reader devices. This is important for hiding the PDF text from search engines.
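If you’re curious what these checkboxes do under the hood, the PDF specification (ISO 32000-1) stores such permissions as bit flags in a signed 32-bit value called /P. The Python sketch below computes that value for the settings above, assuming a revision 3 or later security handler; it doesn’t encrypt anything, the constant names are made up for this example, and the exact value your PDF program writes may differ.

```python
# Permission bit positions from the PDF specification (bit 1 = least significant).
PRINT = 3
MODIFY = 4
COPY = 5
COMMENT_AND_FILL_FORMS = 6
FILL_FORMS = 9
ACCESSIBILITY = 10
ASSEMBLE = 11
HIGH_QUALITY_PRINT = 12

ALL_PERMISSIONS = (PRINT, MODIFY, COPY, COMMENT_AND_FILL_FORMS, FILL_FORMS,
                   ACCESSIBILITY, ASSEMBLE, HIGH_QUALITY_PRINT)


def permissions_value(allowed: set) -> int:
    """Return the signed 32-bit /P value that grants only the bits in `allowed`."""
    value = 0xFFFFFFFF  # reserved bits 7-8 and 13-32 must be set
    value &= ~0b11      # bits 1-2 must be clear
    for bit in ALL_PERMISSIONS:
        if bit not in allowed:
            value &= ~(1 << (bit - 1))
    # /P is stored as a signed 32-bit integer.
    return value - (1 << 32) if value & (1 << 31) else value


# The settings above: high-resolution printing, plus commenting and filling forms only.
recommended = {PRINT, HIGH_QUALITY_PRINT, COMMENT_AND_FILL_FORMS, FILL_FORMS}
print(permissions_value(recommended))  # -1564
```

Leaving the accessibility bit (10) clear corresponds to the “Do NOT enable text access for screen reader devices” setting, and leaving the copy bit (5) clear corresponds to disabling copying of text and images.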

Confirm your security changes and save your PDF.

That’s it! Upload your PDF and update your robots.txt file.

What about PDF Content NOT Used for Lead Generation?

If you have PDFs that you provide to your visitors without requiring a lead gen form, you should still consider hiding those files from search engines.

Why?

First, the duplicate content penalty. Second, you want visitors to have the full experience of your website. That won’t happen if they access the PDF files directly from a search engine.

Of course, you don’t want to hide from search engines the fact that the PDF exists, so do it right: on the page that features the download link, describe what’s in the PDF and the benefits of downloading it.
